Node.js is an excellent runtime environment that you can use to develop backend services. It is built on Google Chrome’s V8 JavaScript engine. Today, we will talk about the Node.js architecture.

Node.js is highly preferred for creating I/O intensive web applications such as single-page applications, online chat applications, and video streaming websites, among others.

Many companies use it. It is open-source, free, and has a large community of Node.js developers.

When you structure a Node.js application correctly, you improve its stability, reduce code duplication, and make it easier to expand your services.

For I/O-intensive workloads, these advantages often give it an edge over server-side platforms such as Java or PHP.

Things To Know About Node.js Architecture

JavaScript on Browser and JavaScript Runtimes

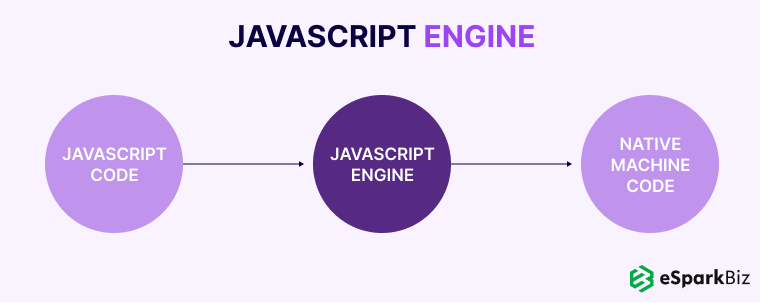

JavaScript is the language of web pages and is used to make them interactive. For this to work, browsers must understand and execute JavaScript code. A JavaScript engine makes this possible.

To execute JavaScript code, a JavaScript engine converts it into machine code. A JavaScript engine behaves like an interpreter and includes a Memory Heap (which stores objects, variables, and functions) and a Call Stack (which executes functions).

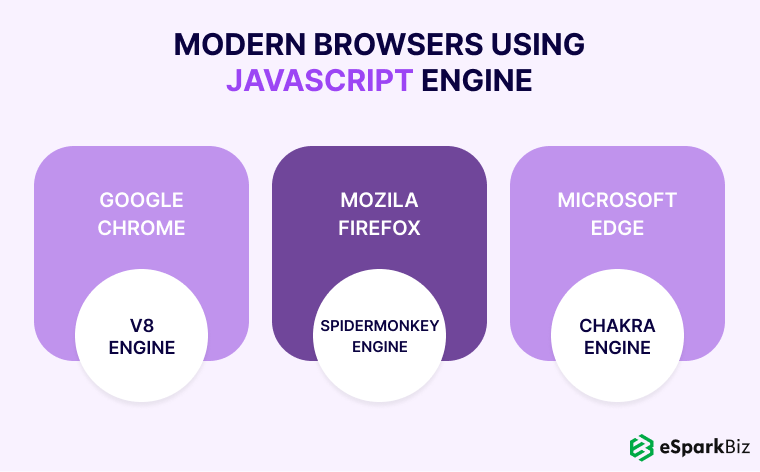

Modern browsers provide a JavaScript runtime built around a JavaScript engine, which executes the JavaScript code of a web page. Different browsers use different JavaScript engines.

For example, Google Chrome uses a JavaScript engine called V8. V8 was built to convert ECMAScript (the standard that JavaScript implements) into machine code for the system on which the browser is installed.

In addition, Google Chrome provides web APIs that browsers need, such as window and document. These are not part of the JavaScript language; they are part of the browser environment that makes up a web page.

Remember also that because different browsers use different JavaScript engines, they can interpret some JavaScript code differently, which can lead to inconsistent results.

Running JavaScript Without Browser

JavaScript is one of the most widely used programming languages and is traditionally used for the frontend. Node.js lets you write backend applications for servers in JavaScript too, so you can use JavaScript for full-stack development.

In 2009, Ryan Dahl, an American software engineer and the original developer of Node.js, took Chrome’s V8, one of the fastest JavaScript engines, and ran it outside the browser.

He used C++ to write wrapper code around the V8 engine and executed it directly on the operating system.

Node.js uses the V8 engine to translate JavaScript code into machine code. Because Node.js targets the operating system rather than the browser, browser-specific web APIs such as window and document are not available.

Instead, OS-related functionality, such as the fs and http modules, is implemented in the Node.js standard library. In simpler terms, Node.js is a JavaScript runtime environment, just as a browser is.

Remember that Node.js does not support every new ECMAScript feature immediately; it supports the features implemented in the version of Chrome’s V8 engine that it ships with.
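A quick way to see this difference is to check which globals and modules exist when a script runs under Node.js. This is a small sketch; the version numbers it prints depend on your installation.

```javascript
const fs = require('fs');
const http = require('http');

// Browser-only web APIs do not exist in Node.js...
console.log(typeof window);   // undefined
console.log(typeof document); // undefined

// ...while OS-level functionality comes from the Node.js standard library.
console.log(typeof fs.readFile);       // function
console.log(typeof http.createServer); // function

// The bundled V8 version determines which ECMAScript features are available.
console.log(`Node.js ${process.versions.node}, V8 ${process.versions.v8}`);
```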

Node.js JavaScript Runtime Environment

There are some common misunderstandings about Node.js:

- Node.js is not a programming language. JavaScript is the language.

- Node.js is not a framework for server applications. Express.js is a framework.

Node.js can be summarised as the following statement:

“Node.js® is a JavaScript runtime built on Chrome’s V8 JavaScript engine.”

The Importance of Good Architecture

You need to start your project with a sound architecture. It is crucial for the project and also determines how you will handle changing requirements in the future.

A chaotic architecture can lead to the following problems:

- Unreadable and complex code, which lengthens the development process and makes the product difficult to test.

- Unnecessary repetition, which makes the code difficult to maintain and manage.

- Difficulty implementing new features. When the structure is cluttered, adding a new feature without breaking existing code becomes a real issue.

These problems are very real and remind us why we need to focus on good project architecture. A good architecture should help you achieve the following objectives:

- Keep code neat and readable

- Reuse pieces of code across the entire application

- Avoid repetition

- Make adding new features hassle-free

Node.js Architecture and Notable Components

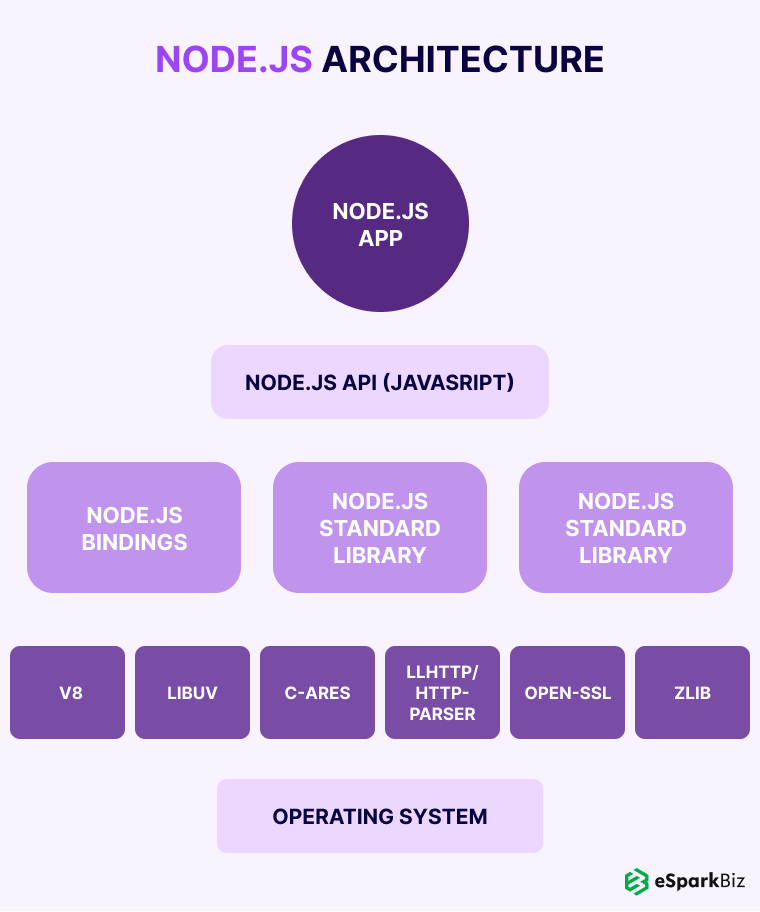

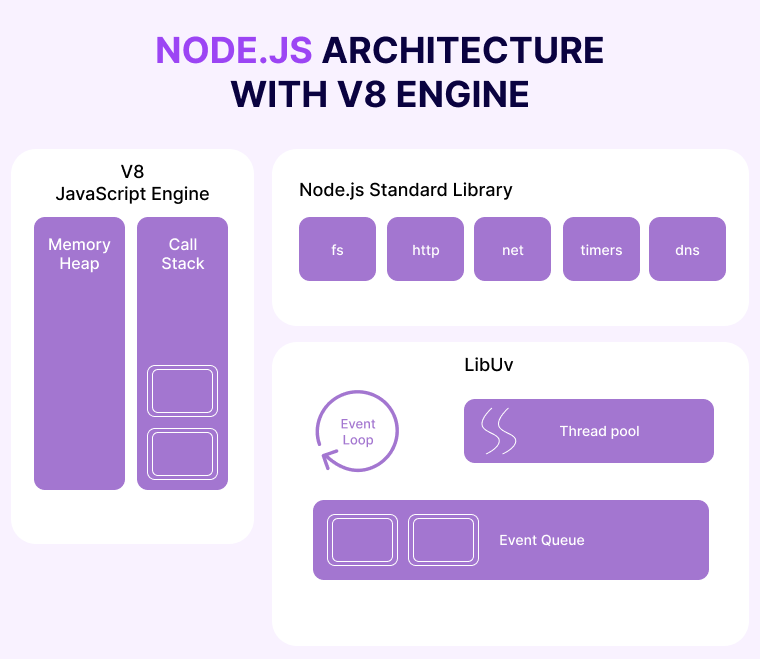

These diagrams show the Node.js architecture and its major components.

Below is a short description of each component:

- V8 JavaScript engine: It contains the Memory Heap, Call Stack, and Garbage Collector. It transforms JavaScript code into machine code for the underlying platform.

- libuv: It contains a thread pool and manages the event loop and event queue. It is a multi-platform C library that focuses on asynchronous I/O.

- Node.js standard library: It includes operating-system-related functionality such as timers (setTimeout), the file system (fs), and network calls (http).

- llhttp: It parses HTTP requests and responses (earlier, http-parser was used).

- c-ares: A C library for asynchronous DNS requests, used in the dns module.

- OpenSSL: It provides the cryptographic functions used in the tls (SSL) and crypto modules.

- zlib: It provides synchronous, asynchronous, and streaming compression and decompression.

- Node.js API: The JavaScript API exposed to applications.
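You can see several of these bundled components from inside a running program. The sketch below prints the versions that process.versions reports for them (the exact set of keys can vary between Node.js releases, hence the fallback) and then exercises zlib and the OpenSSL-backed crypto module directly.

```javascript
const zlib = require('zlib');
const crypto = require('crypto');

// process.versions reports the versions of the bundled components.
const components = ['v8', 'uv', 'llhttp', 'ares', 'openssl', 'zlib'];
for (const name of components) {
  console.log(`${name.padEnd(8)} ${process.versions[name] ?? 'not reported'}`);
}

// zlib: synchronous compress/decompress round trip.
const original = Buffer.from('hello, node.js '.repeat(20));
const compressed = zlib.gzipSync(original);
const restored = zlib.gunzipSync(compressed);
console.log(`zlib: ${original.length} -> ${compressed.length} bytes`);

// OpenSSL: the crypto module's hash functions are backed by it.
const digest = crypto.createHash('sha256').update('node').digest('hex');
console.log(`sha256("node") starts with ${digest.slice(0, 16)}`);
```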

For more details, see the Dependencies page of the official Node.js documentation and the Node.js API documentation (this article refers to version v13.5.0).

All of these components are packaged into the node executable; on Windows, for example, it is node.exe. You can either run a program with it, as in ./node.exe server.js, or start the REPL directly by running it without arguments.

Features of Node.js

The following features of Node.js will help you understand it better.

- Single-threaded: Your JavaScript code runs on a single main thread.

- Non-blocking I/O: The main thread does not wait for an I/O operation to complete.

- Asynchronous: Code that depends on an operation’s result runs later, once the operation has finished.

Since Node.js is single-threaded,

- Node.js programs are simpler, because shared memory does not need to be locked and race conditions between threads do not need to be managed.

- I/O operations are performed asynchronously, so the single thread is not stopped while it waits. This is achieved through the event loop.

- Because there are no multiple threads to run JavaScript in parallel, CPU-intensive tasks are not a good fit.
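The first point can be illustrated with a shared counter. In the sketch below, a thousand asynchronous callbacks update the same variable without any lock; because every callback runs to completion on the single main thread, no update is lost. It is only an illustration of the single-threaded model, not a benchmark.

```javascript
let counter = 0;
let finished = 0;
const TASKS = 1000;

for (let i = 0; i < TASKS; i++) {
  // Each callback is queued and later run on the main thread, one at a time.
  setImmediate(() => {
    counter += 1; // read-modify-write, safe because nothing else runs concurrently
    finished += 1;
    if (finished === TASKS) {
      console.log(`counter = ${counter}`); // counter = 1000
    }
  });
}
```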

Advantages of Node.js Architecture

The Node.js architecture has several advantages over other server-side platforms, particularly for I/O-heavy workloads.

- Handles many concurrent client requests quickly and easily: Using the event queue and thread pool, a Node.js server can efficiently manage a large number of incoming requests.

- No need to create multiple threads: Because the event loop processes requests one after another, there is no need to create a thread per request. A single thread is enough to handle incoming requests, as long as none of them blocks it.

- Needs less memory and fewer resources: Because of the way a Node.js server handles incoming requests, it usually needs less memory and fewer resources. Handling requests on a single thread, one callback at a time, does not strain memory.

Because of these benefits, Node.js servers are often faster and more responsive than servers built with other technologies, especially for I/O-bound workloads.
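To make the first two points concrete, here is a minimal sketch using only the built-in http module. The 100 ms timer stands in for a slow database call (an assumption for illustration). Five requests are sent at once, and because no handler blocks the single thread, they finish in roughly the time of one request rather than five.

```javascript
const http = require('http');

// Each request is handled by a short callback; the "slow" part never blocks the thread.
const server = http.createServer((req, res) => {
  setTimeout(() => res.end(`handled ${req.url}`), 100);
});

// Minimal GET helper; agent: false opens a fresh connection that is not kept alive.
function get(port, path) {
  return new Promise((resolve, reject) => {
    http
      .get({ host: '127.0.0.1', port, path, agent: false }, (res) => {
        let body = '';
        res.setEncoding('utf8');
        res.on('data', (chunk) => { body += chunk; });
        res.on('end', () => resolve(body));
      })
      .on('error', reject);
  });
}

const done = new Promise((resolve, reject) => {
  server.listen(0, '127.0.0.1', async () => {
    const { port } = server.address();
    const start = Date.now();
    try {
      // Five requests in flight at once, all served by one JavaScript thread.
      const bodies = await Promise.all([1, 2, 3, 4, 5].map((i) => get(port, `/req${i}`)));
      const elapsed = Date.now() - start;
      console.log(bodies, `in about ${elapsed} ms`);
      resolve({ bodies, elapsed });
    } catch (err) {
      reject(err);
    } finally {
      server.close();
    }
  });
});
```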

JavaScript Execution and Call Stack

A JavaScript engine executes code using a call stack. Because JavaScript is single-threaded, it has only one call stack. When a browser or Node.js executes JavaScript code, it follows the order in which the program calls its functions.

For example:

const add = (a, b) => a + b;

const multiply = (a, b) => a * b;

const addCofficient = (val) => multiply(val, 1.8);

const addConst = (val) => add(val, 32);

const convertCtoF = (val) => {

let result = val;

result = addCofficient(result);

result = addConst(result);

return result;

};

convertCtoF(100);

When execution enters a function, that function is pushed onto the call stack. If it calls another function, the new function is pushed on top of it.

The engine always executes the function at the top of the call stack. When that function returns, it is popped off the stack. The call stack is therefore a first-in, last-out (FILO) record of the functions currently being executed.
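To watch this happen for convertCtoF(100), the sketch below wraps each function from the example with a hypothetical traced helper that keeps its own mirror of the call stack. It records a snapshot every time a function is entered, so you can see frames being pushed on top of each other and popped again.

```javascript
const stack = []; // our own mirror of the engine's call stack
const trace = []; // snapshot of the stack each time a function is entered

// Hypothetical helper: calling the wrapped function pushes its name onto
// `stack`; returning (or throwing) pops it off again.
function traced(name, fn) {
  return (...args) => {
    stack.push(name);
    trace.push(stack.join(' > '));
    try {
      return fn(...args);
    } finally {
      stack.pop();
    }
  };
}

const add = traced('add', (a, b) => a + b);
const multiply = traced('multiply', (a, b) => a * b);
const addCofficient = traced('addCofficient', (val) => multiply(val, 1.8));
const addConst = traced('addConst', (val) => add(val, 32));
const convertCtoF = traced('convertCtoF', (val) => {
  let result = val;
  result = addCofficient(result);
  result = addConst(result);
  return result;
});

const result = convertCtoF(100);
trace.forEach((line) => console.log(line));
// convertCtoF
// convertCtoF > addCofficient
// convertCtoF > addCofficient > multiply
// convertCtoF > addConst
// convertCtoF > addConst > add
console.log(result); // 212
```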

In the browser or Node.js, when an error is thrown and not caught, the runtime prints a stack trace listing every frame on the call stack at that moment. This shows the state of the call stack, which helps with debugging.

Using an undefined variable, for example changing add(val, 32) to add(val, thirtytwo), produces:

ReferenceError: thirtytwo is not defined

at addConst:5:32

at convertCtoF:10:12

at eval:14:1

Recursion without a terminating condition, for example making addConst call addConst again, produces:

RangeError: Maximum call stack size exceeded

at addConst:5:16

at addConst:5:23

at addConst:5:23
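Both failures can be reproduced and inspected programmatically. This sketch catches each error and prints its name, message, and the first lines of its stack property; the line and column numbers in the trace will differ from the ones above because they depend on where the code lives.

```javascript
const add = (a, b) => a + b;

// Variant 1: refers to a variable that was never declared.
const addConstUndefined = (val) => add(val, thirtytwo);

// Variant 2: calls itself with no terminating condition.
const addConstRecursive = (val) => addConstRecursive(val);

function capture(fn) {
  try {
    fn(100);
  } catch (err) {
    return err;
  }
  return null;
}

const refErr = capture(addConstUndefined);
const rangeErr = capture(addConstRecursive);

console.log(refErr.name, '-', refErr.message);     // ReferenceError - thirtytwo is not defined
console.log(rangeErr.name, '-', rangeErr.message); // RangeError - Maximum call stack size exceeded

// err.stack holds the full trace; print only the first frames for brevity.
console.log(refErr.stack.split('\n').slice(0, 3).join('\n'));
```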

Call Stack With Slow I/O and Blocking Nature

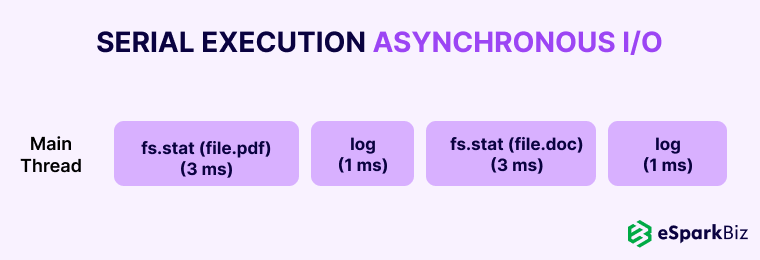

V8’s call stack is synchronous. If a slow operation, such as a network or file system call, runs directly on the call stack, it stops the single thread, which waits until the operation is over. In other words, I/O executed using only the call stack is blocking.

const fs = require('fs');

const pdf = fs.readFileSync('file.pdf'); console.log(pdf.length); // blocks until file.pdf is read

const doc = fs.readFileSync('file.doc'); console.log(doc.length); // starts only after file.pdf is done

In this example, file.pdf is read first, and the thread is put on hold until the read is over. The file file.doc could be read at the same time, because it does not depend on the first call, but with a purely synchronous call stack it is read only after file.pdf is done.

There are several ways to handle slow I/O operations:

- Synchronous: Blocks the process, so it is not suitable for a single-threaded runtime.

- Fork: Clones a new process for each operation. This does not scale well and is expensive.

- Threads: Requires multiple threads and makes shared memory complex to manage.

- Event Loop: Uses asynchronous callback programming and suits a single-threaded runtime.

Because it is single-threaded, Node.js uses the event loop to manage I/O operations asynchronously.

Callbacks let such operations run concurrently. A callback is executed when the operation it is attached to has finished, so it holds the code that depends on the result of the slow I/O call.

const fs = require('fs');

fs.readFile('file.pdf', (err, pdf) => { if (err) throw err; console.log(pdf.length); });

fs.readFile('file.doc', (err, doc) => { if (err) throw err; console.log(doc.length); });
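The version above assumes that file.pdf and file.doc already exist. The self-contained sketch below first creates two throwaway files in a temporary directory, then starts both asynchronous reads. Note that the line after the two readFile calls runs before either callback, because the main thread does not wait for the reads.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Create two throwaway files so the example runs anywhere.
const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'io-demo-'));
const pdfPath = path.join(dir, 'file.pdf');
const docPath = path.join(dir, 'file.doc');
fs.writeFileSync(pdfPath, Buffer.alloc(1024));
fs.writeFileSync(docPath, Buffer.alloc(2048));

const events = [];

// Both reads are handed off immediately; neither waits for the other.
fs.readFile(pdfPath, (err, pdf) => {
  if (err) throw err;
  events.push(`pdf read: ${pdf.length} bytes`);
});
fs.readFile(docPath, (err, doc) => {
  if (err) throw err;
  events.push(`doc read: ${doc.length} bytes`);
});

// Runs before either callback, because the main thread is not blocked.
events.push('both reads started');
```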

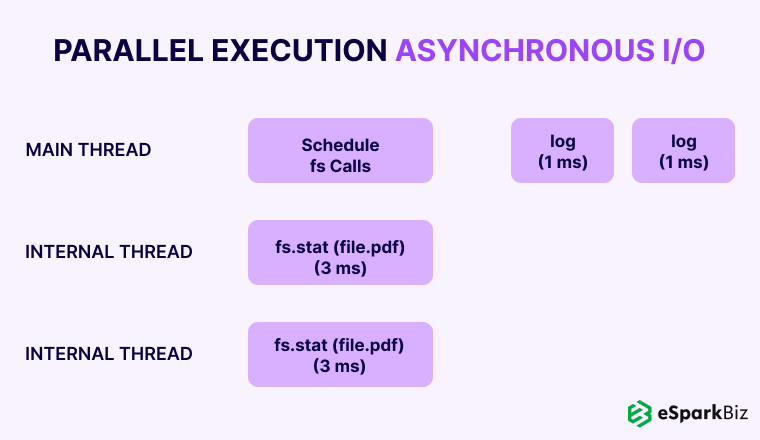

Note: Although JavaScript uses only one thread for execution and has a single call stack, Node.js has an internal thread pool (provided by libuv). Multiple I/O operations can therefore run in parallel in the background.

Asynchronous Non-Blocking I/O

As mentioned above, Node.js uses an asynchronous callback programming model to handle I/O operations so that its single thread stays free.

Each slow external operation is paired with a callback function that is executed when the operation completes. The thread pool executes these operations in parallel, and the event loop handles the callbacks.

The event loop lets Node.js perform non-blocking I/O operations, even though JavaScript is single-threaded, by offloading operations to the system kernel whenever possible.

The following steps describe how the major components interact to provide asynchronous I/O:

- The call stack executes code as explained above, but when it reaches an operation with a delay, it does not block there. Instead, it starts the operation, registers the associated callback function, and continues with the remaining code.

- The external operation runs in the background (not on the main thread), for example in the libuv thread pool. An fs.readFile call, for instance, is carried out by the Node.js library on a background thread.

When the operation completes, its event and callback are added to the event queue (also called the callback queue).

- The event queue holds the callbacks of all finished operations that are waiting to be executed by the main thread.

- The event loop transfers callback functions from the event queue to the call stack so that the main thread can execute them.

- Specifically, whenever the call stack is empty and the event queue has pending callbacks, the event loop moves the next callback from the event queue onto the call stack, where the main thread executes it.

Thus, slow I/O operations do not block the main thread; Node.js executes them in the background.

The main thread keeps running the rest of the program. Once an I/O operation finishes, its callback is executed on the main thread through the event loop and event queue.
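The steps above can be modeled in a few lines. The sketch below is a deliberately simplified, hypothetical model: it is not how libuv is implemented, and the operation names and results are made up. It only mirrors the sequence of starting an operation without waiting, letting the program finish, queuing callbacks, and running them once the call stack is empty.

```javascript
// Simplified, hypothetical model of the steps above; not how libuv works internally.
const log = [];
const background = []; // operations "running" outside the main thread
const eventQueue = []; // callbacks of finished operations, waiting for the main thread

// Start a slow operation: hand it off and return immediately (non-blocking).
function startOperation(name, result, callback) {
  log.push(`started ${name}`);
  background.push(() => eventQueue.push(() => callback(result)));
}

function main() {
  startOperation('readFile', 'file contents', (data) => log.push(`callback got: ${data}`));
  startOperation('dnsLookup', '127.0.0.1', (ip) => log.push(`callback got: ${ip}`));
  log.push('main finished'); // runs before any callback
}

function runEventLoop(program) {
  program(); // the call stack is busy until the program returns
  while (background.length > 0 || eventQueue.length > 0) {
    // Background work completes and queues its callback.
    while (background.length > 0) background.shift()();
    // The call stack is now empty, so queued callbacks run one at a time.
    while (eventQueue.length > 0) eventQueue.shift()();
  }
}

runEventLoop(main);
console.log(log.join('\n'));
// started readFile
// started dnsLookup
// main finished
// callback got: file contents
// callback got: 127.0.0.1
```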

Node.js Concurrency Model

Node.js supports concurrency through an asynchronous, event-driven, non-blocking I/O model built on the event loop and event queue.

Because JavaScript is single-threaded, Node.js does not stop the main thread to wait for slow external events; instead, it turns those events into callback invocations.

This is how JavaScript code handles a delayed operation asynchronously:

console.log("BEFORE TIMEOUT FUNCTION");

setTimeout(function timeout() {

console.log("TIMEOUT FUNCTION");

}, 5000);

console.log("AFTER TIMEOUT FUNCTION");

The output is as follows. The callback is delayed, so it runs last:

BEFORE TIMEOUT FUNCTION → AFTER TIMEOUT FUNCTION → TIMEOUT FUNCTION

I/O Operations By Node.js Library

This section explains what Node.js treats as I/O operations, which it carries out in the background (for example, on its internal thread pool) without blocking the main thread.

Several important I/O operations in Node.js are not part of the ECMAScript specification, so the JavaScript engine does not execute them; they are provided either by Node.js or by the browser.

In Node.js, for example, timers are defined in lib/timers.js, implemented in src/timers.cc, and create an instance of a Timeout object.

setTimeout is implemented in both Node.js and browsers. In the browser it belongs to the window object; in Node.js it belongs to the global object.
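You can confirm both points in a Node.js script. The sketch below checks that setTimeout is a property of the global object and that, unlike browsers (which return a numeric id), Node.js returns a Timeout object with methods such as ref and unref.

```javascript
// In Node.js, setTimeout lives on the global object (globalThis === global).
console.log(globalThis === global);            // true
console.log(global.setTimeout === setTimeout); // true

// Node.js returns a Timeout object rather than a number.
const timer = setTimeout(() => console.log('timer fired'), 10);
console.log(timer.constructor.name);               // Timeout
console.log(typeof timer.ref, typeof timer.unref); // function function
```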

- Node.js File System

These are external operations handled by the fs module, such as reading or writing files. For example, fs.write, fs.createReadStream, and fs.stat.

- Node.js Network Calls

These are network calls to any external party, such as dns.resolve, dns.lookup, http.get, https.request, and socket.connect.

- Node.js Timers

These are time-related operations scheduled to run later, such as setTimeout, setImmediate, and setInterval. Even setTimeout(cb, 0), with a 0 ms delay, is deferred: its callback is queued and executed only after the code currently on the call stack has finished.

Hence, setTimeout does not guarantee an exact time; the delay is only the minimum time before the callback runs.
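The sketch below shows both effects: a 0 ms timer still runs after the synchronous code that follows it, and a 20 ms timer reports how long it actually waited. The measured delay varies from run to run.

```javascript
const order = [];

// Scheduled first, but even with 0 ms it must wait for the call stack to empty.
setTimeout(() => order.push('setTimeout(cb, 0) callback'), 0);

order.push('synchronous code');

// The delay is a minimum: measure how long a 20 ms timer actually took.
const scheduledAt = Date.now();
setTimeout(() => {
  order.push(`20 ms timer ran after ${Date.now() - scheduledAt} ms`);
  console.log(order.join('\n'));
}, 20);
```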

Remember that not every time-consuming task is an I/O operation. For example, a CPU-intensive loop is not an I/O operation, and it blocks the main thread. This is why Node.js is considered a good fit for I/O-intensive tasks rather than CPU-intensive ones.

const crypto = require('crypto');

for (let i = 0; i < 10000; i++) {

crypto.createHash('sha256').update(String(i)).digest('hex'); // CPU-intensive task, runs synchronously

}

console.log('After CPU Intensive Task');

Here, the CPU-intensive loop blocks the main thread, so the console.log call runs only after the loop has finished, unlike with asynchronous operations. CPU-bound work is executed immediately on the main thread, whereas I/O-bound work is passed to libuv and handled asynchronously.

Remember that if the call stack is kept busy by CPU-bound tasks, the main thread cannot run anything from the event queue, including the callbacks of pending I/O tasks. Those callbacks are starved until the stack is free.
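The starvation effect is easy to observe. In this sketch a timer asks to run after about 10 ms, but a busy loop keeps the call stack occupied for about 200 ms, so the callback cannot run until the loop ends.

```javascript
const start = Date.now();
let timerDelay = null;

// Should run after roughly 10 ms...
setTimeout(() => {
  timerDelay = Date.now() - start;
  console.log(`10 ms timer actually ran after ${timerDelay} ms`);
}, 10);

// ...but this CPU-bound loop keeps the call stack busy for about 200 ms,
// so the event loop cannot run the timer callback until it finishes.
let iterations = 0;
while (Date.now() - start < 200) {
  iterations += 1;
}
console.log(`CPU-bound loop finished after ${iterations} iterations`);
```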

Rules For Structuring Node.js App

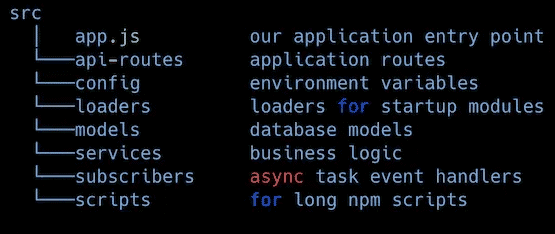

Rule 1: Organizing Files Into Folders

Every file in the application should be in a folder, and each folder should group related elements.

This keeps files neatly separated and categorized. It is the first rule of Node.js app structure to take into consideration.

Rule 2: The 3 Layer Architecture

The objective here is to apply the principle of separation of concerns and move the business logic out of the Node.js API routes, into separate layers: the route (controller) layer, the service layer, and the data access layer.

This is convenient when you want to reuse your business logic in a CLI tool or a recurring task, because the Node.js server will not need to make API calls to itself.
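A framework-free sketch of the three layers is shown below. All class and function names are hypothetical, a Map stands in for the database, and the route handler uses an Express-like (req, res) signature without depending on Express. The point is that cliSignup reuses the service directly, with no HTTP round-trip.

```javascript
// Data access layer: the only code that knows how users are stored.
class UserRepository {
  constructor() {
    this.users = new Map(); // stands in for a real database
    this.nextId = 1;
  }
  async create(data) {
    const user = { id: String(this.nextId++), ...data };
    this.users.set(user.id, user);
    return user;
  }
}

// Service layer: business rules, with no HTTP and no storage details.
class UserService {
  constructor(userRepository) {
    this.userRepository = userRepository;
  }
  async signup({ name, email }) {
    if (!email || !email.includes('@')) throw new Error('Invalid email');
    return this.userRepository.create({ name, email: email.toLowerCase() });
  }
}

// Route (controller) layer: translates HTTP input and output into service calls.
function makeSignupHandler(userService) {
  return async (req, res) => {
    try {
      const user = await userService.signup(req.body);
      res.status(201).json(user);
    } catch (err) {
      res.status(400).json({ error: err.message });
    }
  };
}

// The same service reused from a CLI script or scheduled job.
async function cliSignup(userService, name, email) {
  const user = await userService.signup({ name, email });
  console.log(`created user ${user.id}`);
  return user;
}
```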

Rule 3: Separation Between Business Logic & API

Frameworks like Express.js are great because they offer excellent features for handling requests, views, and routes. With such strong support, you might be tempted to put your business logic directly into your API routes.

That would be a significant mistake, because it produces rigid, monolithic blocks of code that are unmanageable, difficult to read, and easy to break.

An example of what not to do:

route.post('/', async (req, res, next) => {

// This should be a middleware or should be handled by a library like Joi.

const userDTO = req.body;

const isUserValid = validators.user(userDTO)

if(!isUserValid) {

return res.status(400).end();

}

// Lot of business logic here...

const userRecord = await UserModel.create(userDTO);

delete userRecord.password;

delete userRecord.salt;

const companyRecord = await CompanyModel.create(userRecord);

const companyDashboard = await CompanyDashboard.create(userRecord, companyRecord);

...whatever...

// And here is the 'optimization' that messes everything up.

// The response is sent to client...

res.json({ user: userRecord, company: companyRecord });

// But code execution continues :(

const salaryRecord = await SalaryModel.create(userRecord, companyRecord);

eventTracker.track('user_signup',userRecord,companyRecord,salaryRecord);

intercom.createUser(userRecord);

gaAnalytics.event('user_signup',userRecord);

await EmailService.startSignupSequence(userRecord)

});

As development continues over time, code like this becomes harder and harder to test. This is why you should keep your business logic separate and well organized.

An example of what to do:

route.post('/',

validators.userSignup, // this middleware takes care of validation

async (req, res, next) => {

// The actual responsibility of the route layer.

const userDTO = req.body;

// Call to service layer.

// Abstraction on how to access the data layer and the business logic.

const { user, company } = await UserService.Signup(userDTO);

// Return a response to client.

return res.json({ user, company });

});

This is how your service works behind the scenes:

import UserModel from '../models/user';

import CompanyModel from '../models/company';

import SalaryModel from '../models/salary';

export default class UserService {

async Signup(user) {

const userRecord = await UserModel.create(user);

const companyRecord = await CompanyModel.create(userRecord); // needs userRecord to have the database id

const salaryRecord = await SalaryModel.create(userRecord, companyRecord); // depends on user and company to be created

...whatever

await EmailService.startSignupSequence(userRecord)

...do more stuff

return { user: userRecord, company: companyRecord };

}

}

Rule 4: Using a Service Layer

This is where all your business logic belongs. The service layer is essentially a group of classes, each with its own methods, that implement your app’s core logic. Leave out the code that accesses the database; that part is handled by the data access layer.

The folder structure will become:

Rule 5: Using config Folder for Configuration File

Following the well-established principles of the Twelve-Factor App, the recommended way to store API keys and database connection strings in a Node.js app is in environment variables, which you can load with dotenv.

Create a .env file (a version with default values can live in your repository, but never commit real secrets). The dotenv npm package loads the .env file and inserts its variables into the process.env object of Node.js.

For structure and code autocompletion, create a config/index.ts file that imports the dotenv npm package, loads the .env file, and exposes the variables through a single object.

import dotenv from 'dotenv';

// config() will read your .env file, parse the contents, assign it to process.env.

dotenv.config();

export default {

port: process.env.PORT,

databaseURL: process.env.DATABASE_URI,

paypal: {

publicKey: process.env.PAYPAL_PUBLIC_KEY,

secretKey: process.env.PAYPAL_SECRET_KEY,

},

mailchimp: {

apiKey: process.env.MAILCHIMP_API_KEY,

sender: process.env.MAILCHIMP_SENDER,

}

}

This saves you from scattering process.env.MY_RANDOM_VAR references throughout your code, and with code autocompletion you do not need to remember the exact name of each environment variable.
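The same idea can be sketched in plain JavaScript with a fail-fast check, so that a missing variable is reported at startup rather than deep inside a request. This is a sketch under assumptions: the default port of 3000 and the choice of DATABASE_URI as the only required variable are illustrative, and in a real project dotenv would populate process.env before loadConfig runs.

```javascript
// config/index.js (hypothetical): read process.env in one place.
// In a real project, require('dotenv').config() would run first to load .env.

function required(name) {
  const value = process.env[name];
  if (value === undefined || value === '') {
    throw new Error(`Missing required environment variable: ${name}`);
  }
  return value;
}

function loadConfig() {
  const port = Number.parseInt(process.env.PORT || '3000', 10);
  if (Number.isNaN(port)) {
    throw new Error(`PORT must be a number, got "${process.env.PORT}"`);
  }
  return Object.freeze({
    port,
    databaseURL: required('DATABASE_URI'),
    paypal: Object.freeze({
      publicKey: process.env.PAYPAL_PUBLIC_KEY,
      secretKey: process.env.PAYPAL_SECRET_KEY,
    }),
  });
}

// Usage: the rest of the app reads this object instead of process.env.
// const config = loadConfig();
// server.listen(config.port);
```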

Rule 6: A scripts Folder for NPM Scripts

Keeping a separate scripts folder for your npm scripts helps you organize a Node.js project better: longer scripts live in their own files instead of crowding package.json, which makes them easier to maintain. This is one of the vital Node.js app structure rules to follow.

Rule 7: Using Dependency Injection

Node.js comes with great features and tools. However, working with dependencies often causes complications, with issues arising in testability and code maintainability. Dependency injection can help solve this problem.

Dependency injection is a software design pattern in which one or more dependencies (or services) are injected, or passed by reference, into a dependent object.

When you use it in your Node.js applications, you get the following benefits:

- Simpler unit testing: you pass dependencies (for example, mocks) directly to the modules that use them instead of hardcoding them.

- Easier maintenance, without unnecessary coupling between modules.

- A smoother Git workflow: once you have defined your interfaces, they stay stable, which helps you avoid merge conflicts.

Code with no dependency injection:

import UserModel from '../models/user';

import CompanyModel from '../models/company';

import SalaryModel from '../models/salary';

class UserService {

constructor(){}

Signup(){

// Calling UserModel, CompanyModel, etc.

...

}

}

Code with manual dependency injection:

export default class UserService {

constructor(userModel, companyModel, salaryModel){

this.userModel = userModel;

this.companyModel = companyModel;

this.salaryModel = salaryModel;

}

getMyUser(userId){

// models available through 'this'

const user = this.userModel.findById(userId);

return user;

}

}

Injecting custom dependencies:

import UserService from '../services/user';

import UserModel from '../models/user';

import CompanyModel from '../models/company';

const salaryModelMock = {

calculateNetSalary(){

return 42;

}

}

const userServiceInstance = new UserService(UserModel, CompanyModel, salaryModelMock);

const user = await userServiceInstance.getMyUser('12346');

An example of using typedi, an npm library that brings dependency injection to Node.js:

import { Service } from 'typedi';

@Service()

export default class UserService {

constructor(

private userModel,

private companyModel,

private salaryModel

){}

getMyUser(userId){

const user = this.userModel.findById(userId);

return user;

}

}

Now typedi will take care of resolving any dependency that the UserService requires.

import { Container } from 'typedi';

import UserService from '../services/user';

const userServiceInstance = Container.get(UserService);

const user = await userServiceInstance.getMyUser('12346');
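To see what a container does for you, here is a tiny hypothetical container in plain JavaScript. It is not typedi’s API: dependencies are registered by name with a factory function, resolved on first use, and cached so that every consumer gets the same instance. The mock models are made up for illustration.

```javascript
class Container {
  constructor() {
    this.factories = new Map();
    this.instances = new Map();
  }
  register(name, factory) {
    this.factories.set(name, factory);
    return this;
  }
  get(name) {
    if (!this.instances.has(name)) {
      const factory = this.factories.get(name);
      if (!factory) throw new Error(`No provider registered for "${name}"`);
      this.instances.set(name, factory(this)); // resolve once, then cache
    }
    return this.instances.get(name);
  }
}

class UserService {
  constructor(userModel, companyModel, salaryModel) {
    this.userModel = userModel;
    this.companyModel = companyModel;
    this.salaryModel = salaryModel;
  }
  getMyUser(userId) {
    return this.userModel.findById(userId);
  }
}

const container = new Container()
  .register('userModel', () => ({ findById: async (id) => ({ id, name: 'Jane' }) }))
  .register('companyModel', () => ({}))
  .register('salaryModel', () => ({ calculateNetSalary: () => 42 }))
  .register('userService', (c) =>
    new UserService(c.get('userModel'), c.get('companyModel'), c.get('salaryModel')));

// UserService never imports its models; the container wires them in.
container.get('userService').getMyUser('12346').then((user) => console.log(user));
```

In a test, you would register mock factories under the same names before the first get call, without touching UserService.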

Rule 8: Use Unit Testing

Using unit testing in your project is a great idea. Testing is a crucial step in app development, and your project’s flow and final result depend on it.

Without testing, errors can slip into the code, slowing down development and creating other problems.

A common way to test applications is to test them in units: you isolate a part of the code and check that it behaves correctly.

In procedural programming, a unit could be a single function or procedure. Unit tests are usually written by the developers who write the code.

Following are the advantages of this approach:

- Better code quality: Unit testing improves your code’s quality and helps you find problems you might have overlooked before the code moves to the next stage of development. It exposes edge cases and helps you write code efficiently.

- Bugs are found early: You can find problems at an early stage, which saves you a lengthy debugging process later.

- Lower costs: With fewer problems, you spend less time debugging. This saves time and money, which you can invest in making your app better.

Example: Unit test for signup user method

import UserService from '../../../src/services/user';

describe('User service unit tests', () => {

describe('Signup', () => {

test('Should create user record and emit user_signup event', async () => {

const eventEmitterService = {

emit: jest.fn(),

};

const userModel = {

create: (user) => {

return {

...user,

_id: 'mock-user-id'

}

},

};

const companyModel = {

create: (user) => {

return {

owner: user._id,

companyTaxId: '12345',

}

},

};

const userInput= {

fullname: 'User Unit Test',

email: 'user@example.com',

};

const userService = new UserService(userModel, companyModel, eventEmitterService);

const userRecord = await userService.Signup(userInput);

expect(userRecord).toBeDefined();

expect(userRecord._id).toBeDefined();

expect(eventEmitterService.emit).toHaveBeenCalled();

});

})

})

Rule 9: Using Layer for Third Party Services

During development, you might want to use a third-party service to perform certain actions or retrieve specific data. Isolate these calls in a dedicated layer so that your code does not grow too big to handle.

The pub/sub pattern solves this issue. It is a messaging pattern in which some entities, called publishers, send messages and others, called subscribers, receive them.

Publishers do not send messages directly to specific receivers. Instead, they categorize published messages into classes without knowing which subscribers, if any, will handle them.

Similarly, subscribers express interest in one or more classes and receive only the relevant messages, without knowing which publishers, if any, sent them.

The publish-subscribe model enables event-driven architectures and asynchronous parallel processing while improving performance, reliability, and scalability.

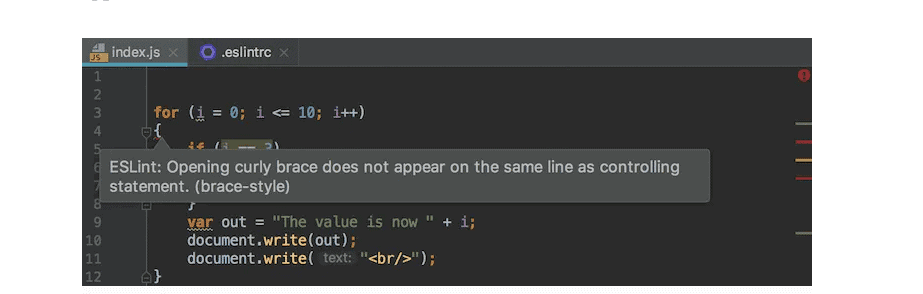
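Node’s built-in EventEmitter is enough to sketch the idea within a single process. The event name, the subscribers, and the signup function below are hypothetical; note that emit() calls listeners synchronously, and a larger system might use a message broker instead.

```javascript
const { EventEmitter } = require('events');

// The event bus: publishers and subscribers only know about this object
// and the event name, not about each other.
const eventBus = new EventEmitter();
const sentEmails = [];
const trackedEvents = [];

// Subscribers: in a real app, each would live in its own module.
eventBus.on('user_signup', (user) => {
  sentEmails.push(`welcome email to ${user.email}`); // e.g. start an email sequence
});
eventBus.on('user_signup', (user) => {
  trackedEvents.push({ event: 'user_signup', userId: user.id }); // e.g. analytics tracking
});

// Publisher: the service does its core job and announces what happened.
function signup(email) {
  const user = { id: 'u1', email };
  eventBus.emit('user_signup', user);
  return user;
}

signup('jane@example.com');
console.log(sentEmails, trackedEvents);
```

Adding another integration later means adding another listener, without changing signup.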

Rule 10: Use a Linter

A linter is a simple tool that makes your development process faster and generally better. It helps you find small errors while keeping the code consistent across the application.

Example: Using a linter
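As a sketch, assuming ESLint v9 with its flat configuration file (eslint.config.js) installed as a dev dependency, a minimal configuration for a CommonJS Node.js project might look like this. The chosen rules are illustrative, not a recommendation from this article.

```javascript
// eslint.config.js: flat config format (ESLint v9 and later)
const config = [
  {
    files: ['**/*.js'],
    languageOptions: {
      ecmaVersion: 2022,
      sourceType: 'commonjs', // enables require/module/exports globals
      globals: {
        console: 'readonly',
        process: 'readonly',
      },
    },
    rules: {
      'no-unused-vars': 'error', // catch leftovers from refactoring
      'no-undef': 'error',       // catch typos in variable names
      eqeqeq: ['error', 'always'],
      'prefer-const': 'warn',
    },
  },
];

module.exports = config;
```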

Rule 11: Use a Style Guideline

A style guide helps you format your code consistently, which makes it much easier to read. It also saves you time deciding details such as where to put a curly brace.

Rule 12: Always Comment Your Code

At times you will write a complex piece of code, and keeping track of what it does and why becomes difficult.

In such cases, comments will save you. They help your colleagues when they read the code, and they also help you remember later what you did and why.

Rule 13: Check File Size

Large files are difficult to handle and maintain. Keep an eye on file size, and when a file grows too long, split it into modules and place the related files together in a folder.

Rule 14: Use gzip Compression

To reduce latency, the server can use gzip compression to shrink responses before sending them to the web browser.

An example of using gzip compression with Express:

const compression = require('compression');

const express = require('express');

const app = express();

app.use(compression()); // compress eligible responses for clients that accept it
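To show roughly what such middleware does, here is a sketch that uses only the built-in http and zlib modules: if the client’s Accept-Encoding header includes gzip, the body is compressed and the Content-Encoding header is set. The payload is made up. gzipSync keeps the sketch short, but it blocks the main thread, so a real server would prefer the asynchronous or streaming zlib API.

```javascript
const http = require('http');
const zlib = require('zlib');

function sendMaybeGzipped(req, res, body) {
  const acceptsGzip = /\bgzip\b/.test(req.headers['accept-encoding'] || '');
  res.setHeader('Content-Type', 'application/json');
  res.setHeader('Vary', 'Accept-Encoding');
  if (acceptsGzip) {
    const compressed = zlib.gzipSync(body);
    res.setHeader('Content-Encoding', 'gzip');
    res.setHeader('Content-Length', compressed.length);
    res.end(compressed);
  } else {
    res.setHeader('Content-Length', Buffer.byteLength(body));
    res.end(body);
  }
}

// A repetitive JSON payload compresses very well.
const payload = JSON.stringify({
  items: Array.from({ length: 500 }, (_, i) => ({ id: i, status: 'active' })),
});

const server = http.createServer((req, res) => sendMaybeGzipped(req, res, payload));

const result = new Promise((resolve, reject) => {
  server.listen(0, '127.0.0.1', () => {
    const { port } = server.address();
    const options = { host: '127.0.0.1', port, path: '/', agent: false, headers: { 'Accept-Encoding': 'gzip' } };
    const req = http.get(options, (res) => {
      const chunks = [];
      res.on('data', (chunk) => chunks.push(chunk));
      res.on('end', () => {
        server.close();
        const raw = Buffer.concat(chunks);
        const decoded = zlib.gunzipSync(raw).toString('utf8');
        console.log(`sent ${raw.length} bytes instead of ${Buffer.byteLength(payload)}`);
        resolve({ encoding: res.headers['content-encoding'], rawLength: raw.length, decoded });
      });
    });
    req.on('error', (err) => { server.close(); reject(err); });
  });
});
```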

Rule 15: Use promises

You can handle asynchronous code in JavaScript with callbacks. However, raw callbacks make control flow and error handling harder to follow than the equivalent synchronous code. Promises solve this problem in Node.js.

Promises make your code easier to read and test, and they offer functional programming semantics together with better error handling.

An example of a promise:

const myPromise = new Promise((resolve, reject) => {

// Simulate an operation that fails in about 10% of cases

if (Math.random() >= 0.1) {

resolve('success');

} else {

reject(new Error('In 10% of the cases, I fail.'));

}

});

Rule 16: Use Error Handling Support

Errors are unavoidable while writing code; how efficiently you deal with them is up to you. When you use promises, you can rely on their built-in error handling: pass a rejection handler as the second argument to then(), as below, or attach one with catch().

myPromise.then ((resolvedValue) => {

console.log(resolvedValue);

}, (error) => {

console.log (error);

});
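The sketch below compares the two common styles for handling a rejection that happens partway through a sequence of async steps: a promise chain with a single catch(), and async/await with try/catch. fetchUser is a hypothetical operation that rejects for non-positive ids.

```javascript
// Hypothetical async operation that succeeds or fails depending on its input.
function fetchUser(id) {
  return new Promise((resolve, reject) => {
    setTimeout(() => {
      if (id > 0) resolve({ id, name: `user-${id}` });
      else reject(new Error(`Invalid user id: ${id}`));
    }, 10);
  });
}

// 1. Promise chain: one catch() handles a rejection from any earlier step.
const viaChain = fetchUser(1)
  .then((user) => fetchUser(user.id - 1)) // id 0 rejects
  .then((user) => `never reached: ${user.name}`)
  .catch((error) => `chain caught: ${error.message}`);

// 2. async/await: the same flow reads like synchronous code with try/catch.
async function viaAwait() {
  try {
    const user = await fetchUser(1);
    const next = await fetchUser(user.id - 1);
    return `never reached: ${next.name}`;
  } catch (error) {
    return `await caught: ${error.message}`;
  }
}

Promise.all([viaChain, viaAwait()]).then((results) => console.log(results));
// [ 'chain caught: Invalid user id: 0', 'await caught: Invalid user id: 0' ]
```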

Rule 17: Use A Pub/Sub Layer

A simple Node.js API endpoint that only creates a user today may soon need to call a third-party service, such as an analytics service, or start an email sequence.

Very soon, a simple ‘create’ operation ends up doing several things. This violates the single responsibility principle.

Hence, it is a good idea to separate responsibilities from the beginning to keep your code manageable and maintainable.

Aspects to Be Considered for Designing Node.js Architecture

Type Safety

Developers often have to deal with critical bugs reported at runtime, and a common cause is calling a function with the wrong parameters. Type safety, for example through TypeScript, catches many of these mistakes before the code runs.

Separation of Concern

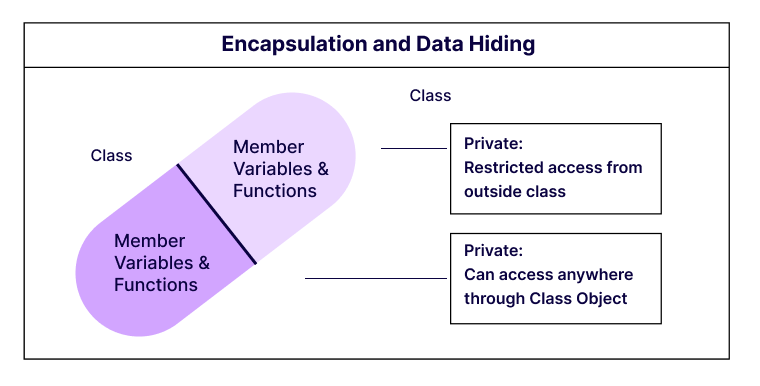

Separation of concerns means that every component has a clearly defined role. You can apply this principle to both classes and files.

Feature Encapsulation

Feature encapsulation means grouping the files related to a single feature together. This helps you reuse code in different projects, and it gives you a logical mental map for finding a specific file when you need it as a dependency.

Better Error Handling

It is crucial for an application to handle errors, and the corresponding API responses, uniformly. An error-handling module can define all the possible error classes and how each should be handled.

Better Response Handling

As with errors, handling responses consistently across endpoints is crucial during development.
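A minimal sketch covering both error handling and response handling: expected errors extend a hypothetical ApiError base class that carries an HTTP status and a stable code, and two helpers turn errors and successful results into response objects with the same shape. The class names, codes, and response shape are assumptions for illustration.

```javascript
class ApiError extends Error {
  constructor(message, statusCode, code) {
    super(message);
    this.name = this.constructor.name;
    this.statusCode = statusCode;
    this.code = code;
  }
}

class BadRequestError extends ApiError {
  constructor(message = 'Bad request') { super(message, 400, 'BAD_REQUEST'); }
}

class NotFoundError extends ApiError {
  constructor(message = 'Not found') { super(message, 404, 'NOT_FOUND'); }
}

// One place that turns any error into a uniform response.
function toErrorResponse(err) {
  if (err instanceof ApiError) {
    return { status: err.statusCode, body: { error: { code: err.code, message: err.message } } };
  }
  // Unknown errors: do not leak internal details to the client.
  return { status: 500, body: { error: { code: 'INTERNAL', message: 'Internal server error' } } };
}

// Successful responses share the same envelope shape.
function toSuccessResponse(data, status = 200) {
  return { status, body: { data } };
}

console.log(toErrorResponse(new NotFoundError('User 42 not found')));
console.log(toErrorResponse(new TypeError('x is undefined')));
console.log(toSuccessResponse({ id: 42 }));
```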

Better Promise Management

Promises have largely replaced callbacks, and async/await has largely replaced explicit promise chains.

Robust Unit Tests

Writing tests should be a mandatory part of the development process. Unit tests often require mocking numerous classes.

Simple Deployability

Deployment should be simple, even when you deploy the application manually. This is an important part of the process.

How to Design a Node.js Architecture?

Setting Up Folder and Files

Start by creating your project folder and initial files.

$ mkdir node-starter
$ cd node-starter
$ touch index.js
$ npm init -y

Creating The Structure

Make the base folders for the project.

$ mkdir config src src/controllers src/models src/services src/helpers

Add Dependencies

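Add your initial dependencies:

$ npm install --save body-parser express mongoose mongoose-unique-validator slugify

The Babel and nodemon scripts used below also need these development dependencies:

$ npm install --save-dev @babel/core @babel/node @babel/preset-env nodemon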

Setting Up Babel

In the project root, create a file called .babelrc with the following content:

{
  "presets": [
    "@babel/preset-env"
  ]
}

Next, open package.json and add the following scripts:

"scripts": {
  "start": "babel-node index.js",
  "dev:start": "clear; nodemon --exec babel-node index.js"
}

Create the Server

In the config folder, create a file called server.js with the following code:

//config/server.js
import express from "express";
import bodyParser from "body-parser";

const server = express();
server.use(bodyParser.json());

export default server;

Now import the server config into the index.js file:

import server from './config/server';

const PORT = process.env.PORT || 5000;

server.listen(PORT, () => {
  console.log(`app running on port ${PORT}`);
});

Now, you should be able to run your server with the following script:

$ npm run dev:start

You should see output like the following:

[nodemon] 1.19.4
[nodemon] to restart at any time, enter `rs`
[nodemon] watching dir(s): *.*
[nodemon] watching extensions: js,mjs,json
[nodemon] starting `babel-node index.js`
app running on port 5000

Setting Up Database

To set up the database, add a file called database.js under config:

//config/database.js
import mongoose from "mongoose";

class Connection {
  constructor() {
    const url =
      process.env.MONGODB_URI || `mongodb://localhost:27017/node-starter`;
    console.log("Establish new connection with url", url);
    // Mongoose uses native promises by default. The useNewUrlParser,
    // useFindAndModify, useCreateIndex and useUnifiedTopology flags were only
    // needed in Mongoose 5; Mongoose 6+ no longer supports them, so they are omitted.
    mongoose
      .connect(url)
      .catch((error) => console.error("MongoDB connection error:", error));
  }
}

export default new Connection();

Now, import it at the start of your index.js file.

//index.js
import './config/database';
//...

Creating A Model

Create your first model. In src/models, create a file called Post.js with the following content:

//src/models/Post.js
import mongoose, { Schema } from "mongoose";
import uniqueValidator from "mongoose-unique-validator";
import slugify from "slugify";

class Post {
  initSchema() {
    const schema = new Schema(
      {
        title: {
          type: String,
          required: true,
        },
        slug: String,
        subtitle: {
          type: String,
          required: false,
        },
        description: {
          type: String,
          required: false,
        },
        content: {
          type: String,
          required: true,
        },
      },
      { timestamps: true }
    );

    // Regenerate the slug whenever the title changes.
    // An async hook needs no next() callback: Mongoose waits for the returned promise.
    schema.pre("save", async function () {
      const post = this;
      if (!post.isModified("title")) {
        return;
      }
      post.slug = slugify(post.title, "_");
      console.log("set slug", post.slug);
    });

    schema.plugin(uniqueValidator);
    mongoose.model("posts", schema);
  }

  getInstance() {
    this.initSchema();
    return mongoose.model("posts");
  }
}

export default Post;

Create Services

Create a base Service class that holds the common functionality for the API, so that other services can inherit it.

Create a file called Service.js in the src/services folder:

//src/services/Service.js
import mongoose from "mongoose";

class Service {
  constructor(model) {
    this.model = model;
    this.getAll = this.getAll.bind(this);
    this.insert = this.insert.bind(this);
    this.update = this.update.bind(this);
    this.delete = this.delete.bind(this);
  }

  async getAll(query) {
    let { skip, limit } = query;
    skip = skip ? Number(skip) : 0;
    limit = limit ? Number(limit) : 10;
    // Remove the pagination keys so they are not used as filters.
    delete query.skip;
    delete query.limit;

    if (query._id) {
      try {
        query._id = new mongoose.mongo.ObjectId(query._id);
      } catch (error) {
        console.log("not able to generate mongoose id with content", query._id);
      }
    }

    try {
      let items = await this.model.find(query).skip(skip).limit(limit);
      // countDocuments(query) counts only the documents matching the filter
      // (the older count() method is deprecated).
      let total = await this.model.countDocuments(query);
      return {
        error: false,
        statusCode: 200,
        data: items,
        total
      };
    } catch (errors) {
      return {
        error: true,
        statusCode: 500,
        errors
      };
    }
  }

  async insert(data) {
    try {
      // create() resolves with the new document or throws.
      let item = await this.model.create(data);
      return {
        error: false,
        item
      };
    } catch (error) {
      console.log("error", error);
      return {
        error: true,
        statusCode: 500,
        message: error.errmsg || "Not able to create item",
        errors: error.errors
      };
    }
  }

  async update(id, data) {
    try {
      let item = await this.model.findByIdAndUpdate(id, data, { new: true });
      // findByIdAndUpdate resolves to null when no document has this id.
      if (!item)
        return {
          error: true,
          statusCode: 404,
          message: "item not found"
        };
      return {
        error: false,
        statusCode: 202,
        item
      };
    } catch (error) {
      return {
        error: true,
        statusCode: 500,
        error
      };
    }
  }

  async delete(id) {
    try {
      let item = await this.model.findByIdAndDelete(id);
      if (!item)
        return {
          error: true,
          statusCode: 404,
          message: "item not found"
        };
      return {
        error: false,
        deleted: true,
        statusCode: 202,
        item
      };
    } catch (error) {
      return {
        error: true,
        statusCode: 500,
        error
      };
    }
  }
}

export default Service;

Now create the Post service, which inherits all the functionality you just wrote.

Under src/services, create a file PostService.js with the following content:

//src/services/PostService.js
import Service from './Service';

class PostService extends Service {
  constructor(model) {
    super(model);
  }
}

export default PostService;


Create The Controllers

Create a file Controller.js under src/controllers and add the following code:

//src/controllers/Controller.js
class Controller {
  constructor(service) {
    this.service = service;
    this.getAll = this.getAll.bind(this);
    this.insert = this.insert.bind(this);
    this.update = this.update.bind(this);
    this.delete = this.delete.bind(this);
  }

  async getAll(req, res) {
    let response = await this.service.getAll(req.query);
    // Forward the service's status code so failures are not reported as 200.
    return res.status(response.statusCode).send(response);
  }

  async insert(req, res) {
    let response = await this.service.insert(req.body);
    if (response.error) return res.status(response.statusCode).send(response);
    return res.status(201).send(response);
  }

  async update(req, res) {
    const { id } = req.params;
    let response = await this.service.update(id, req.body);
    return res.status(response.statusCode).send(response);
  }

  async delete(req, res) {
    const { id } = req.params;
    let response = await this.service.delete(id);
    return res.status(response.statusCode).send(response);
  }
}

export default Controller;

Now, create a PostController file under src/controllers:

//src/controllers/PostController.js
import Controller from './Controller';
import PostService from "./../services/PostService";
import Post from "./../models/Post";

const postService = new PostService(
  new Post().getInstance()
);

class PostController extends Controller {
  constructor(service) {
    super(service);
  }
}

export default new PostController(postService);

Create The Routes

Create routes for the API.

Under the config folder, create a file routes.js:

//config/routes.js
import PostController from './../src/controllers/PostController';

export default (server) => {
  // POST ROUTES
  server.get(`/api/post`, PostController.getAll);
  server.post(`/api/post`, PostController.insert);
  server.put(`/api/post/:id`, PostController.update);
  server.delete(`/api/post/:id`, PostController.delete);
}

Now import the routes into the server.js file immediately after the body parser setup:

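//config/server.js
import express from "express";
import bodyParser from "body-parser";
import setRoutes from "./routes";

const server = express();
server.use(bodyParser.json());
setRoutes(server);

export default server;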

Make a POST request to /api/post with the following JSON body:

{
  "title": "post 1",
  "subtitle": "subtitle post 1",
  "content": "content post 1"
}

The response should look similar to this:

{
  "error": false,
  "item": {
    "_id": "5dbdea2e188d860cf3bd07d1",
    "title": "post 1",
    "subtitle": "subtitle post 1",
    "content": "content post 1",
    "createdAt": "2019-11-02T20:42:22.339Z",
    "updatedAt": "2019-11-02T20:42:22.339Z",
    "slug": "post_1",
    "__v": 0
  }
}

Conclusion

The development of a Node.js application comes with its own set of challenges. Your aim should be to write neat, reusable code.

The information and rules above should guide you in the right direction, helping you use the Node.js application architecture effectively and follow the practices that support it.

We hope you enjoyed reading this article and that it proves valuable to any Node.js development company. Thank you!

-

Is Node.js good for a microservices architecture?

Node.js is a good choice for a microservices architecture because it is lightweight, which helps each service perform well and respond quickly.

-

What is Node.js good for?

Node.js runs JavaScript on a single thread with a non-blocking, event-driven model, which makes it well suited to event-driven servers. It is used both for traditional websites and for back-end API services.
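The single-threaded, non-blocking model can be seen in a few lines: synchronous code always runs to completion first, and queued callbacks run afterwards on the same thread. Callbacks for real I/O, such as network or disk reads, are likewise queued rather than waited on. A minimal sketch; runEventLoopDemo is a hypothetical name.

```javascript
// Records the order in which Node.js's single thread runs each piece of work.
function runEventLoopDemo() {
  const order = [];
  return new Promise((resolve) => {
    order.push("sync: start");

    // Timer callback: queued for a later turn of the event loop.
    setTimeout(() => {
      order.push("timer callback");
      resolve(order);
    }, 0);

    // Promise callback: a microtask, run as soon as the current synchronous code finishes.
    Promise.resolve().then(() => order.push("promise callback"));

    order.push("sync: end");
  });
}

runEventLoopDemo().then((order) => console.log(order.join(" -> ")));
// prints: sync: start -> sync: end -> promise callback -> timer callback
```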

-

Who uses Node.js?

Netflix, the world's leading internet television network, is one of the top companies that run their servers on Node.js. Walmart, Uber, PayPal, LinkedIn, and Medium also use Node.js.

-

Does Node.js have a future?

Yes. Node.js has been adopted by many of the top web development companies, which suggests it is a technology with a strong future.