Node.js streams are a popular topic among developers right now. Demand for Node.js developers has grown along with it, and many of them use streams to build applications, websites, and servers.

People new to full-stack development are often confused by the hype around Node.js. So, here is a quick guide to it!

First of all, the "JS" in Node.js stands for JavaScript. Its developers describe Node.js as a JavaScript runtime built on Chrome's V8 JavaScript engine.

So it is not a language; it is a platform for running JavaScript outside the browser.

Types Of Streams In Node.js

Streams in Node.js fall into a few categories, each suited to a particular kind of task.

In Node.js, there are four types of streams, based on their functionality:

- Writable– A stream you can write data to, such as a file or the response sent to a client. You can create a writable file stream using fs.createWriteStream().

- Readable– A stream you can read data from, such as a file or a request received from a client. You can create a readable file stream using fs.createReadStream().

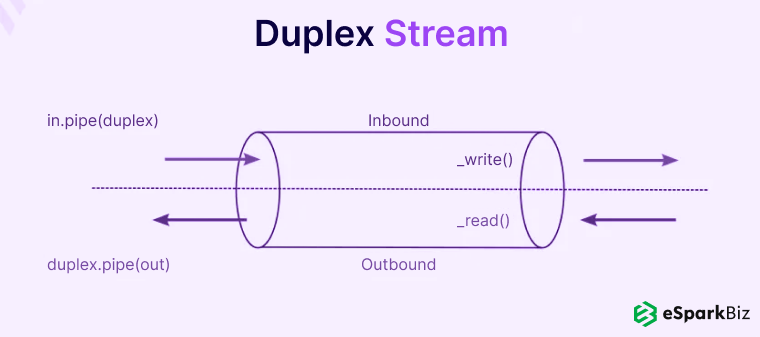

- Duplex– A stream that is both readable and writable. A TCP socket (net.Socket) is a common example.

- Transform– A duplex stream whose output is computed from its input. Compression is a typical use: you write plain data into the stream and read compressed data out of it, or the reverse for decompression.
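As a rough illustration of the four categories, the sketch below builds one stream of each kind and checks which base class it inherits from. The `classify` helper and the `kinds` object are illustrative names, not part of Node.js.

```javascript
const { Readable, Writable, Duplex, Transform } = require("stream");
const net = require("net");
const zlib = require("zlib");

// Report the most specific stream category an object belongs to.
// Transform is checked first because every Transform is also a Duplex.
function classify(stream) {
  if (stream instanceof Transform) return "transform";
  if (stream instanceof Duplex) return "duplex";
  if (stream instanceof Readable) return "readable";
  if (stream instanceof Writable) return "writable";
  return "not a stream";
}

const kinds = {
  readable: classify(Readable.from(["a", "b"])),
  writable: classify(new Writable({ write(chunk, encoding, callback) { callback(); } })),
  duplex: classify(new net.Socket()), // an unconnected TCP socket
  transform: classify(zlib.createGzip()),
};

console.log(kinds);
```

The order of the checks matters here: a transform stream would also pass the duplex test, so the most specific class is tested first.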

Here is a list of the most commonly used streams:

- fs read streams

- TCP Sockets

- fs write streams

- stdout

- zlib streams

- stderr

- stdin, etc.

Some streams play different roles depending on where they are used.

For example, an HTTP response is a readable stream on the client side and a writable stream on the server side. Similarly, the fs module provides both readable and writable streams.

Asynchronous iterators also come into the picture when dealing with streams. The asynchronous nature of Node.js accounts for its fast processing: it can receive and process multiple requests without blocking on I/O.

Hence, when working with streams that transfer data chunk by chunk, async iterators are a convenient way to consume them. However, do not mix async iterators with EventEmitter-style handlers on the same readable stream.

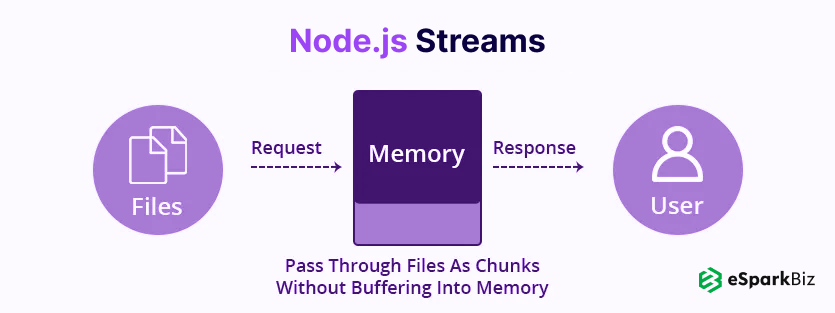

Working Of Node.js Stream

The idea behind Node.js streams is simple. Let's say you are asked to carry a box full of apples from a farm to a store. There are two ways of doing so.

In the first, you lift the big, heavy box and carry it to the store in one go. In the second, you move the apples from the farm to the store a few at a time.

From a business point of view, the second way is more efficient because it meets continuous demand and saves time.

No customer leaves your store empty-handed. The first way takes longer before anything reaches the shelves, and the store may lose customers in the meantime. This is how Node.js streams benefit an application.

A stream acts as a channel that transfers data in small chunks. Data flows continuously, and processing can begin without waiting for the whole input to be read. This saves the time and memory otherwise spent on loading the full input first.

A Practical Example of Node JS Stream

To help you understand Node.js streams, here are some basic examples. They should give you a good head start in building streams of your own.

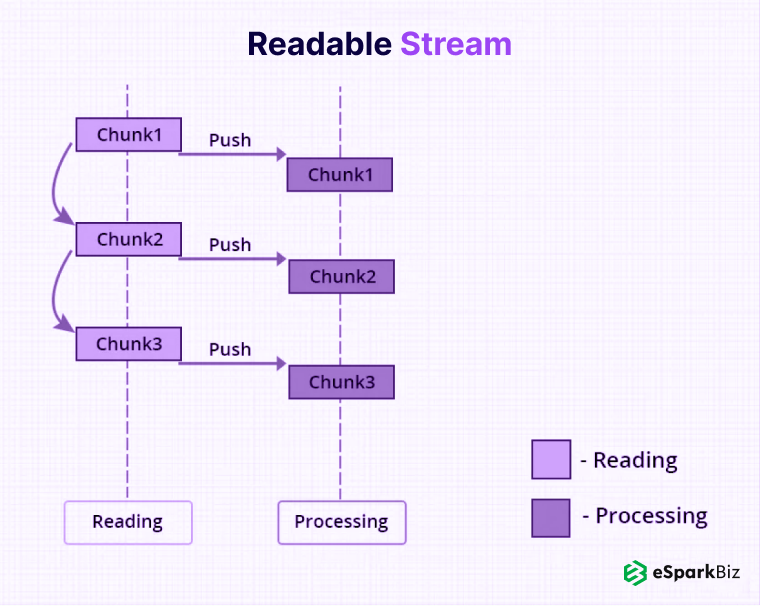

How To Create A Readable Stream?

Let us start with the most basic stream, the readable stream. To begin with, you need to initialize one. This can be done as follows:

const Stream = require("stream");

// read() is a no-op here; data would be supplied with basicReadable.push()

const basicReadable = new Stream.Readable({ read() {} });

// Apart from this, you can even use the fs module.

const fs = require('fs');

const readableStream = fs.createReadStream('./article.md', {

highWaterMark: 10

});

Here, fs.createReadStream() has created a readable stream with a buffer size (highWaterMark) of 10 bytes. Thus, the stream reads the file in small chunks of up to 10 bytes each. After this, you can add the following code to print the output.

readableStream.on('readable', () => {

process.stdout.write(`[${readableStream.read()}]`);

});

readableStream.on('end', () => {

console.log('DONE');

});
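To see the effect of highWaterMark directly, here is a minimal sketch that writes a 25-byte file to the temporary directory and records the size of every chunk the stream delivers. The `chunkSizes` helper and the file name `apples-demo.txt` are made up for this illustration.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

// Read a file with the given highWaterMark and collect the size of each chunk.
async function chunkSizes(filePath, highWaterMark) {
  const sizes = [];
  for await (const chunk of fs.createReadStream(filePath, { highWaterMark })) {
    sizes.push(chunk.length);
  }
  return sizes;
}

// Hypothetical demo file: 25 bytes of ASCII text.
const demoFile = path.join(os.tmpdir(), "apples-demo.txt");
fs.writeFileSync(demoFile, "0123456789abcdefghijklmno");

chunkSizes(demoFile, 10).then((sizes) => console.log(sizes)); // logs [ 10, 10, 5 ]
```

Two full chunks of 10 bytes are followed by a final chunk with the remaining 5 bytes.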

Given the importance of async iterators mentioned earlier, let us consume a readable stream using an async iterator as follows:

import * as fs from 'fs';

async function logChunks(readable) {

for await (const chunk of readable) {

console.log(chunk);

}

}

const readable = fs.createReadStream(

'tmp/test.txt', {encoding: 'utf8'});

logChunks(readable);

// Output:

// 'This is a test!\n'

Instead of simply reading the data, you can even store the readable data into a string as follows:

import {Readable} from 'stream';

import * as assert from "assert";

async function readableToString2(readable) {

let result = '';

for await (const chunk of readable) {

result += chunk;

}

return result;

}

const readable = Readable.from('test file', {encoding: 'utf8'});

assert.equal(await readableToString2(readable), 'test file');

Modes Of Readable Stream

Besides knowing how to use a readable stream, it is essential to be aware of its modes. A readable stream runs in one of two modes:

- Paused Mode– The stream.read() method must be called explicitly, again and again, until all the chunks of data have been read.

- Flowing Mode– Data is read from the source automatically and delivered in chunks, as quickly as possible, through the data event.

An example of pause mode is given below:

var fs = require("fs");

var newreadableStream = fs.createReadStream("text.txt");

var data = "";

var chunk;

newreadableStream.on("readable", function () {

while ((chunk = newreadableStream.read()) != null) {

data += chunk;

}

});

newreadableStream.on("end", function () {

console.log(data);

});

The while loop keeps calling read() until it returns null, which means the internal buffer is empty for now. The readable event is emitted again whenever more data becomes available, and once more when the end of the file is reached.

When a readable stream runs in flowing mode, it emits a data event for each chunk of data. An example script of a readable stream in flowing mode is given below:

var fs = require("fs");

var data = '';

var myreaderStream = fs.createReadStream('textfile.txt'); //Create a readable stream

myreaderStream.setEncoding('UTF8'); // Set the encoding to be utf8.

// Manage stream events like data, end, and error

myreaderStream.on('data', function(chunk) {

data += chunk;

});

Here, calling fs.createReadStream() creates a readable stream that reads from the file 'textfile.txt'. Attaching a handler for the data event makes the stream start flowing.

The stream then reads the data in chunks and emits each one. The next part of the script handles the end and error events as follows:

myreaderStream.on("end", function () {

console.log(data);

});

myreaderStream.on("error", function (err) {

console.log(err.stack);

});

console.log("Program Ended");

Understanding The Execution

When executed, the stream reads the data in small chunks and emits them sequentially. You can also choose how much data is read per chunk with the highWaterMark option. The script also handles the end and error events, which indicate the status of the read.

The stream emits the end event when no chunks of data are left to read. Similarly, if the stream cannot read the file, for example because the file does not exist, it emits an error event to indicate the failure.
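The following sketch shows one way to observe both outcomes. The `tryRead` helper is hypothetical, and the path in `missing` is only assumed not to exist on your machine.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

// Resolves with the error code if the stream fails, or "ok" if it ends normally.
function tryRead(filePath) {
  return new Promise((resolve) => {
    const stream = fs.createReadStream(filePath);
    stream.on("error", (err) => resolve(err.code));
    stream.on("end", () => resolve("ok"));
    stream.resume(); // switch to flowing mode; the data itself is discarded
  });
}

// Hypothetical path that is assumed not to exist.
const missing = path.join(os.tmpdir(), "no-such-file-for-stream-demo.txt");
tryRead(missing).then((outcome) => console.log("outcome:", outcome));
```

For a missing file, the error object carries the code ENOENT, which is what the error handler above resolves with.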

You need to be a little careful while working with the different modes of readable streams, because in Node.js all readable streams start in paused mode by default.

Thus, to use flowing mode, you need to switch the stream over.

Here are the ways to switch from paused mode to flowing mode and back:

- Attaching a data event handler, or calling stream.resume() or stream.pipe(), switches the stream to flowing mode.

- If there are no pipe destinations, flowing mode can be changed back to paused mode by calling the stream.pause() method.

- For streams with pipe destinations, the mode can be changed by removing the pipe destinations. This is done with the stream.unpipe() method.
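The mode switches listed above can be observed through the readableFlowing property, which is null before any consumer is attached, true in flowing mode, and false once the stream has been paused. A small sketch, with `states` as an illustrative variable name:

```javascript
const { Readable } = require("stream");

const readable = Readable.from(["a", "b", "c"]);
const states = [];

states.push(readable.readableFlowing); // no consumer attached yet
readable.on("data", () => {});         // attaching a data handler starts the flow
states.push(readable.readableFlowing);
readable.pause();                      // explicit pause
states.push(readable.readableFlowing);
readable.resume();                     // back to flowing mode
states.push(readable.readableFlowing);

console.log(states); // logs [ null, true, false, true ]
```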


Events Related To Readable Stream

By now, you should understand the concept and modes of the readable stream. Now it is time to review the important events a readable stream emits.

- data– Emitted every time a chunk of data is read from the stream. The chunk is a Buffer unless an encoding has been set.

- close– Emitted when the stream and its underlying resources, such as a file descriptor, have been closed. No more events are emitted after it.

- readable– Emitted when data is available to be read from the stream.

- end– As its name suggests, this event is emitted when all the data has been read from the stream, i.e. it marks the end of the reading process.

- error– Emitted whenever Node.js runs into a problem while reading the stream.
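To watch these events arrive in order, the sketch below records them for a small in-memory stream. `recordEvents` is a hypothetical helper, and Readable.from() stands in for a file so that the example needs no input on disk.

```javascript
const { Readable } = require("stream");

// Collect the names of the events a readable stream emits, in order.
function recordEvents(items) {
  return new Promise((resolve, reject) => {
    const seen = [];
    const readable = Readable.from(items);
    readable.on("data", (chunk) => seen.push(`data:${chunk}`));
    readable.on("end", () => seen.push("end"));
    readable.on("error", reject);
    readable.on("close", () => {
      seen.push("close");
      resolve(seen);
    });
  });
}

recordEvents(["x", "y"]).then((seen) => console.log(seen));
```

With the default stream options, each chunk produces a data event, end follows the last chunk, and close comes last.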

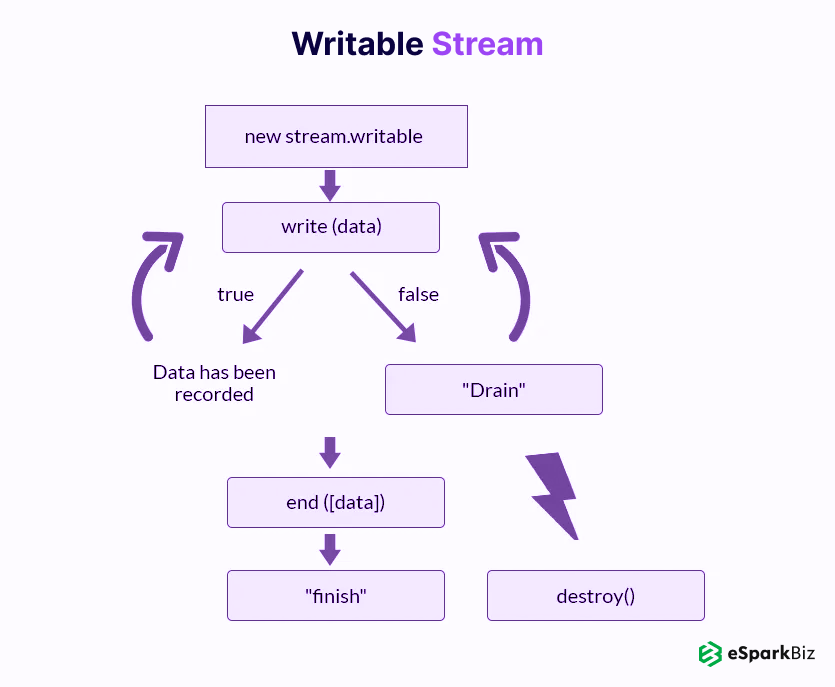

How To Create A Writable Stream?

The method used for writing to a writable stream is in its name: write(). With it, you can send data, such as the contents of a file, to a destination in chunks.

This can be understood from the following example, which uses the fs module of Node.js:

var fs = require("fs");

var myreadableStream = fs.createReadStream("1doc.txt");

var mywritableStream = fs.createWriteStream("2doc.txt");

myreadableStream.setEncoding("utf8");

myreadableStream.on("data", function (chunk) {

mywritableStream.write(chunk);

});

To finish the process cleanly, you should signal that no more data will be written. For this, use the writable.end() method.

This method accepts an optional final chunk and an optional callback that runs once the stream has finished.

// Write 'start, ' and then end with 'finish!'.

const fs = require("fs");

const file = fs.createWriteStream("textfile.txt");

file.write("start, ");

file.end("finish!");

// You cannot write more!
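As a quick check of what ends up on disk, here is a sketch that performs the same two writes and reads the file back once the finish event fires. `writeGreeting` and the temporary file name are assumptions made for the example.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

// Write two chunks, end the stream, and resolve with the resulting file contents.
function writeGreeting(target) {
  return new Promise((resolve, reject) => {
    const file = fs.createWriteStream(target);
    file.on("error", reject);
    // finish fires after end() was called and all data has been flushed
    file.on("finish", () => resolve(fs.readFileSync(target, "utf8")));
    file.write("start, ");
    file.end("finish!");
  });
}

// Hypothetical output location in the temporary directory.
const demoTarget = path.join(os.tmpdir(), "write-demo.txt");
writeGreeting(demoTarget).then((contents) => console.log(contents)); // logs "start, finish!"
```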

Note that readable and writable streams can be used together, with the output of one feeding the other.

In the code below, a Stream.Readable pushes two chunks and is then piped into mywritableStream, which logs each chunk it receives.

const Stream = require("stream");

const myreadableStream = new Stream.Readable({

read() {

this.push("ping!");

this.push("pong!");

this.push(null); // signal the end of the data, otherwise the stream never stops

},

});

const mywritableStream = new Stream.Writable();

mywritableStream._write = (chunk, encoding, next) => {

console.log(chunk.toString());

next();

};

// pipe() ends the writable stream automatically when the readable stream ends

myreadableStream.pipe(mywritableStream);

Events Related To Writable Stream

As with readable streams, here is a summary of the events for writable streams.

- close– Serves the same purpose as for a readable stream. It is emitted when the stream and its underlying resources have been closed, and no more events are emitted after it.

- finish– Emitted after stream.end() has been called and all the data has been flushed to the underlying destination.

- pipe– Emitted on the writable stream whenever a readable stream is piped into it.

- unpipe– Emitted on the writable stream when a readable stream is un-piped from it.

- error– Emitted if an error occurs while writing or piping data.
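A minimal sketch for observing these events: it pipes a small in-memory readable into a writable that discards its input and records which events fire on the writable side. `recordWritableEvents` and `sink` are illustrative names.

```javascript
const { Readable, Writable } = require("stream");

// Record the events emitted on a writable stream while a readable is piped into it.
function recordWritableEvents() {
  return new Promise((resolve, reject) => {
    const seen = [];
    const sink = new Writable({
      write(chunk, encoding, callback) {
        callback(); // discard the data, we only care about the events
      },
    });
    for (const name of ["pipe", "unpipe", "finish"]) {
      sink.on(name, () => seen.push(name));
    }
    sink.on("error", reject);
    sink.on("close", () => {
      seen.push("close");
      resolve(seen);
    });
    Readable.from(["a", "b"]).pipe(sink);
  });
}

recordWritableEvents().then((seen) => console.log(seen));
```

The pipe event is recorded first because it fires as soon as pipe() is called, and close arrives last, after the stream has finished.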

The Stream Module

The stream module is the foundation for streaming data in Node.js. It ships with Node.js, and every stream it provides is an EventEmitter that reports its progress through asynchronous events.

The module is loaded with:

const stream = require('stream');

With HTTP servers, there is no need to load the stream module yourself, because the http module returns readable and writable stream objects on its own.

Stream Powered Node APIs

The following built-in Node.js APIs are based on streams:

- process.stdout– A writable stream connected to standard output.

- process.stderr– A writable stream connected to standard error.

- process.stdin– A readable stream connected to standard input.

- zlib.createGzip()– Creates a transform stream that compresses data using gzip.

- zlib.createGunzip()– Creates a transform stream that decompresses gzip data.

- zlib.createDeflate()– Creates a transform stream that compresses data using deflate.

- net.connect()– Initiates a stream-based socket connection.

- http.request()– Returns an instance of the http.ClientRequest class, which is a writable stream.

- fs.createReadStream()– Creates a readable stream from a file.

- fs.createWriteStream()– Creates a writable stream to a file.

- zlib.createInflate()– Creates a transform stream that decompresses deflate data.

- net.Socket– A duplex stream that represents a TCP socket. It underlies network APIs such as net.connect().

The Pipe Method

There are cases when the output of a readable stream is needed as the input of a writable stream. This is where the pipe method comes in.

The pipe method sends data from one stream to another without you having to handle the stream events yourself.

Before we get into the pipe method, let us first look at code that uses events to transfer data from one stream to another:

var fs = require("fs");

var myReadStream = fs.createReadStream(__dirname + "/readMe.txt", "utf8");

var myWriteStream = fs.createWriteStream(__dirname + "/writeMe.txt");

myReadStream.on("data", function (chunk) {

console.log("new chunk received");

myWriteStream.write(chunk);

});

In the above code, we take each chunk from the read stream and write it to the write stream. All of this can be done more simply with pipe, as shown below:

var fs = require("fs");

var myReadStream = fs.createReadStream(__dirname + "/readMe.txt", "utf8");

var myWriteStream = fs.createWriteStream(__dirname + "/writeMe.txt");

myReadStream.pipe(myWriteStream);
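One thing pipe() takes care of that the event-based version does not is backpressure: write() returns false once the destination's buffer is full, and the source should wait for the drain event before sending more. The sketch below makes that visible with a deliberately slow writable and a tiny 4-byte buffer; both are assumptions chosen for the demonstration.

```javascript
const { Writable } = require("stream");

// A slow destination with a very small buffer, so it fills up immediately.
const slowWritable = new Writable({
  highWaterMark: 4,
  write(chunk, encoding, callback) {
    setImmediate(callback); // pretend every write takes a moment
  },
});

const firstOk = slowWritable.write("ab");    // 2 bytes buffered, below the 4-byte mark
const secondOk = slowWritable.write("cdef"); // 6 bytes buffered, above the mark

console.log(firstOk, secondOk); // logs: true false

// drain fires once the buffer has emptied and it is safe to write again
slowWritable.once("drain", () => console.log("drained"));
```

A source that ignores the false return value still works, but its data piles up in memory; pipe() pauses the readable stream and resumes it on drain for you.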

With pipe(), the data from the read stream flows straight into the write stream. Both approaches produce the same output file. The pipe method becomes even more useful when the data needs to be sent to a client, as illustrated in the following example:

var http = require("http");

var fs = require("fs");

var server = http.createServer(function (req, res) {

console.log("request was made: " + req.url);

res.writeHead(200, { "Content-Type": "text/plain" });

var myReadStream = fs.createReadStream(__dirname + "/readMe.txt", "utf8");

// Stream the file straight into the HTTP response

myReadStream.pipe(res);

});

server.listen(3000, "127.0.0.1");

console.log("listening on port 3000");

Node.js’s Built-In Transform Stream

Writing a transform stream from scratch may seem a bit tough, so Node.js ships with several built-in transform streams, such as the crypto and zlib streams.

Let us first look at an example of a zlib stream. We will use the zlib.createGzip() stream, which compresses the data written to it.

In addition, we use the fs readable and writable streams. Together they make a simple file-compression script:

const fs = require('fs');

const newzlib = require('zlib');

const myfile = process.argv[2];

fs.createReadStream(myfile)

.pipe(newzlib.createGzip())

.pipe(fs.createWriteStream(myfile + '.gz'));

In this code, the file passed as an argument is read through a readable stream, and its content is piped into the built-in zlib gzip stream, which compresses it. The compressed data is then piped into a writable stream for the new gzipped file.
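A chain of pipe() calls does not forward errors from one stream to the next. As an alternative sketch, the same compression can be written with pipeline() from the stream/promises module (available in recent Node.js versions), which rejects if any stage fails. The `gzipFile` helper and the sample file are assumptions for this example, not part of the script above.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");
const zlib = require("zlib");
const { pipeline } = require("stream/promises");

// Compress `source` into `source + ".gz"` and resolve with the new file name.
async function gzipFile(source) {
  const target = source + ".gz";
  await pipeline(
    fs.createReadStream(source),
    zlib.createGzip(),
    fs.createWriteStream(target)
  );
  return target;
}

// Hypothetical sample input in the temporary directory.
const sample = path.join(os.tmpdir(), "gzip-demo.txt");
fs.writeFileSync(sample, "hello streams\n".repeat(100));

gzipFile(sample)
  .then((target) => console.log("wrote", target))
  .catch((err) => console.error("compression failed:", err.message));
```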

Pipes can also be combined with events. This helps in tracking the progress of the script while it runs.

By writing a small message for each chunk, you can follow the progress of the script. Because pipe() returns the destination stream, event handlers can be attached along the chain.

const fs = require('fs');

const myzlib = require('zlib');

const newfile = process.argv[2];

fs.createReadStream(newfile)

.pipe(myzlib.createGzip())

.on('data', () => process.stdout.write('.'))

.pipe(fs.createWriteStream(newfile + '.zz'))

.on('finish', () => console.log('Done'));

It is evident from the above example that pipes give us great flexibility in consuming streams. The interaction between streams can be customized even further with a transform stream of our own.

Customizing The Interaction Between Streams

const fs = require('fs');

const newzlib = require('zlib');

const myfile = process.argv[2];

const { Transform } = require('stream');

const reportProgress = new Transform({

transform(chunk, encoding, callback) {

process.stdout.write('.');

callback(null, chunk);

}

});

fs.createReadStream(myfile)

.pipe(newzlib.createGzip())

.pipe(reportProgress)

.pipe(fs.createWriteStream(myfile + '.zz'))

.on('finish', () => console.log('Done'));
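The reportProgress stream above passes every chunk through unchanged. A transform stream can also modify the data on its way through; the following sketch upper-cases whatever is written to it. `createUpperCase` and `shout` are made-up names for this illustration.

```javascript
const { Readable, Transform } = require("stream");

// A transform stream that upper-cases every chunk passing through it.
function createUpperCase() {
  return new Transform({
    transform(chunk, encoding, callback) {
      callback(null, chunk.toString().toUpperCase());
    },
  });
}

// Pipe some strings through the transform and collect the result.
async function shout(words) {
  let result = "";
  for await (const chunk of Readable.from(words).pipe(createUpperCase())) {
    result += chunk;
  }
  return result;
}

shout(["ping ", "pong"]).then((text) => console.log(text)); // logs "PING PONG"
```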

By now it should be clear that combining multiple streams is powerful. We can arrange them in whatever order we need to perform a sequence of operations.

For example, you can encrypt a file before or after compressing it with gzip by piping it through another transform stream in the order you want. This can be done with the crypto module:

const crypto = require("crypto");

// crypto.createCipher() is deprecated and removed from recent Node.js releases, so createCipheriv() is used.

// The salt and the all-zero IV are placeholders for illustration; use a random IV in real code.

const key = crypto.scryptSync("a_secret", "salt", 24);

const iv = Buffer.alloc(16, 0);

fs.createReadStream(myfile)

.pipe(newzlib.createGzip())

.pipe(crypto.createCipheriv("aes-192-cbc", key, iv))

.pipe(reportProgress)

.pipe(fs.createWriteStream(myfile + ".zz"))

.on("finish", () => console.log("Done"));

To unpack the files produced by the above code, use the opposite streams of crypto and zlib in reverse order, with the same key and iv:

fs.createReadStream(myfile)

.pipe(crypto.createDecipheriv("aes-192-cbc", key, iv))

.pipe(newzlib.createGunzip())

.pipe(reportProgress)

.pipe(fs.createWriteStream(myfile.slice(0, -3)))

.on("finish", () => console.log("Done"));

In the above script, the encrypted, compressed file is read through a read stream and piped into the crypto decipher stream.

The decrypted output is then piped into the zlib gunzip stream, and the result is finally written to a file whose name drops the .zz extension.
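The "reverse order" rule can be checked in memory with a short round trip. This is only a sketch: `collect` and `roundTrip` are hypothetical helpers, and the fixed salt and all-zero IV are placeholders that should not be used for real data.

```javascript
const crypto = require("crypto");
const zlib = require("zlib");
const { Readable } = require("stream");

// Placeholder key material for the demo only.
const key = crypto.scryptSync("a_secret", "demo-salt", 24);
const iv = Buffer.alloc(16, 0);

// Gather everything a stream emits into a single Buffer.
async function collect(stream) {
  const chunks = [];
  for await (const chunk of stream) chunks.push(chunk);
  return Buffer.concat(chunks);
}

// Compress then encrypt, and afterwards decrypt then decompress.
async function roundTrip(text) {
  const packed = await collect(
    Readable.from([Buffer.from(text)])
      .pipe(zlib.createGzip())
      .pipe(crypto.createCipheriv("aes-192-cbc", key, iv))
  );
  const unpacked = await collect(
    Readable.from([packed])
      .pipe(crypto.createDecipheriv("aes-192-cbc", key, iv))
      .pipe(zlib.createGunzip())
  );
  return unpacked.toString();
}

roundTrip("a box full of apples").then((text) => console.log(text));
```

If the two unpacking steps were swapped, gunzip would receive encrypted bytes and fail, which is why the order has to mirror the packing order.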

Duplex Stream

After understanding readable and writable streams, let us now look at their combination, i.e. duplex streams. We will describe an example of a duplex stream that uses the 'net' module.

Let us begin by creating a variable named net that holds the 'net' module.

var net = require("net");

var fs = require("fs");

// After this, create a server using the net module

var server = net.createServer(function (connect) {

var log = fs.createWriteStream("hfi.log");

console.log("connection is established");

connect.on("end", function () {

console.log("connection ended");

});

In the next part of the code, we call write() several times and then use pipe().

connect.write("Welcome to Heathrow Flight Information.\r\n");

connect.write("We call it HFI; the Heathrow Flight Information.\r\n");

connect.write("We will get your message and display it on the board to passengers.\r\n");

// Echo the client's input back to it and also write it to the log file

connect.pipe(connect).pipe(log);

});

Now add the server listener for port 7777:

server.listen(7777, function () {

console.log("server is on 7777");

});

Now run the script and connect to the server on port 7777 with a TCP client such as telnet. The client displays the messages passed to connect.write(), and anything it sends is echoed back and written to hfi.log.
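To experiment with the echo idea without opening a port, a duplex stream can also be built by hand. The sketch below, with the made-up names `createEcho` and `echoText`, hands everything written to it straight back to its readable side, much like connect.pipe(connect) does for a socket.

```javascript
const { Duplex } = require("stream");

// A duplex stream whose readable side returns whatever was written to it.
function createEcho() {
  return new Duplex({
    read() {
      // nothing to do here: data is pushed from write() below
    },
    write(chunk, encoding, callback) {
      this.push(chunk); // make the written chunk available for reading
      callback();
    },
    final(callback) {
      this.push(null); // end the readable side once writing has ended
      callback();
    },
  });
}

// Write a message into the echo stream and read the reply back.
async function echoText(text) {
  const echo = createEcho();
  echo.end(text);
  let reply = "";
  for await (const chunk of echo) reply += chunk;
  return reply;
}

echoText("Flight BA123 is boarding").then((reply) => console.log(reply));
```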

Conclusion

Streams are at the heart of Node.js. They are a large part of what lets the platform handle I/O asynchronously and scale well.

Concepts such as pipe, transform streams, and the stream module are core parts of Node.js. To design an efficient and interactive application, you should pay special attention to these topics.

This was a simple, easy-to-understand guide to Node.js and its streams. We hope it helped you understand the basics of Node.js streams.

We hope you had a great time reading this article. Thank you!

How To Pause Stream Read Data In Node.js?

Using the readable.pause() method, you can pause a stream that is reading data in Node.js.

What Is An Example Of Duplex Stream In Node.js?

A concrete example of a duplex stream is a network socket.

Is Node.js Good For Video Streaming?

Yes. Node.js is a good option for streaming video and other multimedia due to its asynchronous nature.